Stereo Accuracy and Error Modeling


Stereo Errors, Validation, and Accuracy

There are two kinds of stereo errors: mismatch and estimation. A mismatch error occurs when the correlation match itself is wrong, so the disparity is simply incorrect. An estimation error occurs when the correlation match is correct but the subpixel disparity value is estimated imprecisely.

The purpose of validation is to remove mismatch errors only. We recommend Texture Validation and Surface Validation as the most effective combination. In general, the default values for these validation methods work well. Validation does not affect the accuracy of the disparity pixels that remain valid; it only discards pixels that it believes to be mismatch errors.

Stereo Accuracy – Numbers

The short answer: larger stereo masks provide better accuracy but smooth the 3D surface more; a mask size of 11 is a good compromise.

We conducted an experiment to measure the magnitude of stereo estimation errors. The results are summarized in Tables 1 and 2 below; each value is the standard deviation of the disparity estimation error.

The numbers are in pixels. For example, using stereo mask 11, edge mask 9 with 320 x 240 resolution images, the standard deviation on disparity values was 0.11 pixels. Since 2 standard deviations should capture 95% of a Gaussian probability distribution, one can think of this as approximately ±0.22 pixels.

Table 1: Correlation accuracy results—Stereo Mask 11, Edge Mask 9

| Resolution | Correlation Accuracy |
| --- | --- |
| 160 x 120 | 0.10 pixels |
| 320 x 240 | 0.11 pixels |
| 640 x 480 | 0.10 pixels |

Table 2: Correlation accuracy results for 320 x 240 resolutions—Range of mask sizes

(the edge mask was always set to stereo mask − 2)

| Stereo Mask | Correlation Accuracy |
| --- | --- |
| 5 | 0.18 pixels |
| 7 | 0.18 pixels |
| 9 | 0.14 pixels |
| 11 | 0.11 pixels |
| 13 | 0.10 pixels |
| 15 | 0.10 pixels |

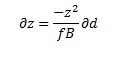

Calculating the 3D Point Errors

We model the 3D stereo error with two parameters: the calibration error (p, for “pointing error”) and the correlation error (m, for “matching error”). The calibration accuracy p for your camera can be requested from Point Grey Research by contacting Support. Almost all 640 x 480 cameras have a value of p between 0.06 and 0.08, and all 1024 x 768 cameras have a value between 0.1 and 0.15. This value applies at the maximum resolution of the camera and should be divided by the reduction in resolution if stereo is not done at full resolution. For example, a 640 × 480 camera running stereo at 640 × 480 would use p as given by PGR; for stereo at 320 × 240 you would use p/2.

Correlation accuracy, m, can be estimated from Tables 1 and 2.
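As a minimal sketch of how p and m might be chosen in practice, the snippet below divides a full-resolution pointing error by the resolution reduction and looks up m from the Table 2 measurements. The function and variable names are hypothetical and are not part of any PGR API; the value 0.08 is simply the upper end of the range quoted above for 640 x 480 cameras.

```python
# Table 2 of this article: disparity standard deviation (pixels) at 320 x 240,
# keyed by stereo mask size (edge mask = stereo mask - 2).
TABLE2_M_320X240 = {5: 0.18, 7: 0.18, 9: 0.14, 11: 0.11, 13: 0.10, 15: 0.10}


def scaled_pointing_error(p_full_res, full_width, stereo_width):
    """Divide the full-resolution pointing error by the resolution reduction."""
    reduction = full_width / stereo_width  # e.g. 640 / 320 = 2
    return p_full_res / reduction


def matching_error(stereo_mask):
    """Look up m for a measured stereo mask size.

    Raises KeyError for mask sizes that were not measured in Table 2.
    """
    return TABLE2_M_320X240[stereo_mask]


# Hypothetical usage: a 640 x 480 camera with p = 0.08, stereo at 320 x 240.
p = scaled_pointing_error(0.08, full_width=640, stereo_width=320)
m = matching_error(11)
print(f"p = {p:.3f} pixels, m = {m:.2f} pixels")
```

The lookup deliberately does not interpolate between mask sizes, since the article only reports the measured values.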

The equations controlling XYZ determination are:

$$z = \frac{fB}{d}, \qquad x = \frac{uz}{f}, \qquad y = \frac{vz}{f}$$

where d is the disparity, (u, v) is the pixel position in the image relative to the image centre (i.e., u = col − centreCol, v = row − centreRow), B is the stereo baseline and f is the focal length. These are the equations implemented in the triclopsRCDToXYZ() family of functions.
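The conversion described above can be sketched in a few lines. This is an illustration only, assuming the standard pinhole stereo relations z = fB/d, x = uz/f and y = vz/f with f and d in pixels; it is not the Triclops implementation, and the function name and parameters are hypothetical.

```python
def rcd_to_xyz(row, col, disparity, f, baseline, centre_row, centre_col):
    """Convert (row, col, disparity) to (x, y, z) in the units of the baseline.

    f and disparity are in pixels; (centre_row, centre_col) is the image centre.
    """
    if disparity <= 0:
        raise ValueError("disparity must be positive")
    u = col - centre_col          # horizontal offset from the image centre
    v = row - centre_row          # vertical offset from the image centre
    z = f * baseline / disparity  # range from disparity
    x = u * z / f
    y = v * z / f
    return x, y, z


# Hypothetical usage: 320 x 240 image, f = 250 pixels, B = 0.10 m, d = 20.
x, y, z = rcd_to_xyz(row=100, col=200, disparity=20,
                     f=250.0, baseline=0.10,
                     centre_row=120, centre_col=160)
print(x, y, z)
```

Because z depends on 1/d, the sketch rejects non-positive disparities rather than returning an infinite or negative range.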

For a given result of triclopsRCDToXYZ, you obtain (x, y, z). The tolerances in x and y are determined by the calibration error p and are quite simple:

$$\Delta x = \frac{pz}{f}$$

$$\Delta y = \frac{pz}{f}$$

The accuracy in z is a little more complicated:

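$$\Delta z = \frac{\partial z}{\partial d}\,\Delta d = -\frac{fB}{d^{2}}\,\Delta d$$

Substituting $fB/z$ for d we get:

$$\Delta z = -\frac{z^{2}}{fB}\,\Delta d$$

or

$$|\Delta z| = \frac{m z^{2}}{fB}$$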

since m is the uncertainty in disparity. Hence for a given expected error of p and m we can calculate the errors in (x, y, z).

As an example, say we have the following 3D point from stereo done at 320 × 240 with enhanced stereo and enhanced rectification. Typical results for a 4 mm camera would be:

| Parameter | Value |
| --- | --- |
| p | 0.04 |
| m | 0.05 |
| f | 250 |
| B | 0.10 |
| d | 20 |
| (x, y, z) | (1.0, 0.5, 1.25) |

Then

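$$\Delta x = \frac{pz}{f} = \frac{0.04 \times 1.25}{250} = 0.0002\ \text{m} = 0.2\ \text{mm}$$

$$\Delta y = \frac{pz}{f} = \frac{0.04 \times 1.25}{250} = 0.0002\ \text{m} = 0.2\ \text{mm}$$

$$\Delta z = \frac{m z^{2}}{fB} = \frac{0.05 \times 1.25^{2}}{250 \times 0.10} \approx 0.0031\ \text{m} = 3.1\ \text{mm}$$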

If p and m each represent one standard deviation of the pointing and matching error distributions, we can reasonably double these values, since two standard deviations cover about 95% of a Gaussian distribution. Consequently:

Δx = ±0.4 mm

Δy = ±0.4 mm

Δz = ±6 mm
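The worked example can be reproduced with a short script. This is a sketch under the assumption that the error model is Δx = Δy = pz/f and Δz = mz²/(fB), with B and z in metres and p, m and f in pixels, which is consistent with the figures quoted in this article; the function name is hypothetical.

```python
def point_errors(p, m, f, baseline, z):
    """Return one-standard-deviation errors (dx, dy, dz) in the units of z.

    p: pointing (calibration) error in pixels
    m: matching (correlation) error in pixels
    f: focal length in pixels
    baseline: stereo baseline, same length unit as z
    """
    dx = p * z / f
    dy = p * z / f
    dz = m * z ** 2 / (f * baseline)  # grows with the square of the range
    return dx, dy, dz


# Values from the example in this article (lengths assumed to be in metres).
dx, dy, dz = point_errors(p=0.04, m=0.05, f=250.0, baseline=0.10, z=1.25)

# Double the one-sigma errors and convert metres to millimetres.
two_sigma_mm = [2.0 * err * 1000.0 for err in (dx, dy, dz)]
print("2-sigma errors (mm): x=%.2f y=%.2f z=%.2f" % tuple(two_sigma_mm))
```

Note that the range error scales with z², so doubling the distance to the point roughly quadruples Δz while Δx and Δy only double.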

Your Mileage May Vary

These numbers are for a Color 640 × 480 4 mm Digiclops camera. They indicate the magnitude of errors one can expect, but special circumstances such as different optics and different scenes will change the nature of the errors one experiences.